Collaborative Academic Project | Oct 2023 to Dec 2023 | Teammates: Carly Lave, Sky Araki-Russel | Instructor: Panagiotis Michalatos

1. Concept

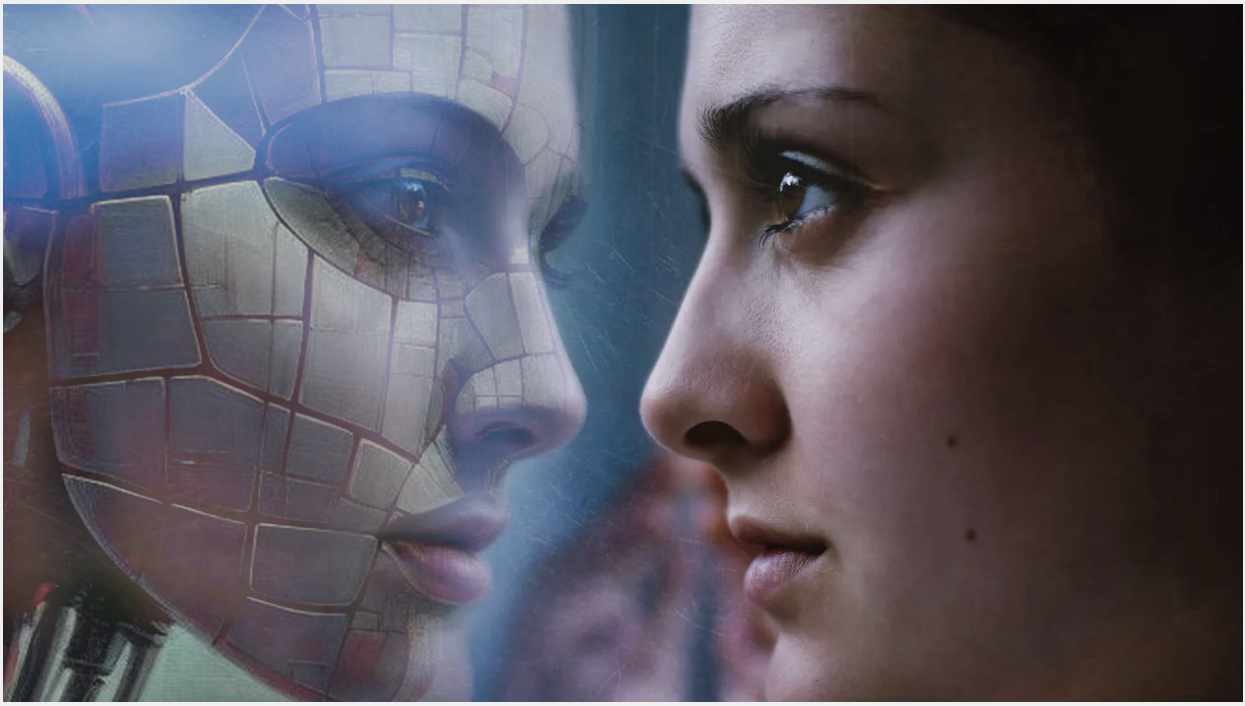

"Narcissus and the Machine" is an interactive art installation that creates a dialogue between humans and artificial intelligence (AI) not through the medium of language, but through the language of movement. This project is born from a provocative inquiry: if AI has its own consciousness, how would it perceive humans? By envisioning AI as a "mirror", can we gain a deeper insight into our own selves by observing our "reflections" through the AI's perspective? In response, we have breathed life into such an AI entity. Though in its nascent stage, this AI can observe human movements and translate them into various water motion expressions, encompassing the majesty of ocean waves, the descent of waterfalls, the gentle pattern of rainfall, and the serene spread of ripples.

Water reflects the individual gazing upon its surface, and our AI-powered machine does the same, which is why water is the main element of the project. The project draws inspiration from the myth of Narcissus, riffing on the title figure's canonical fascination with appearance, reflection, and embodiment through water.

2. Model Training

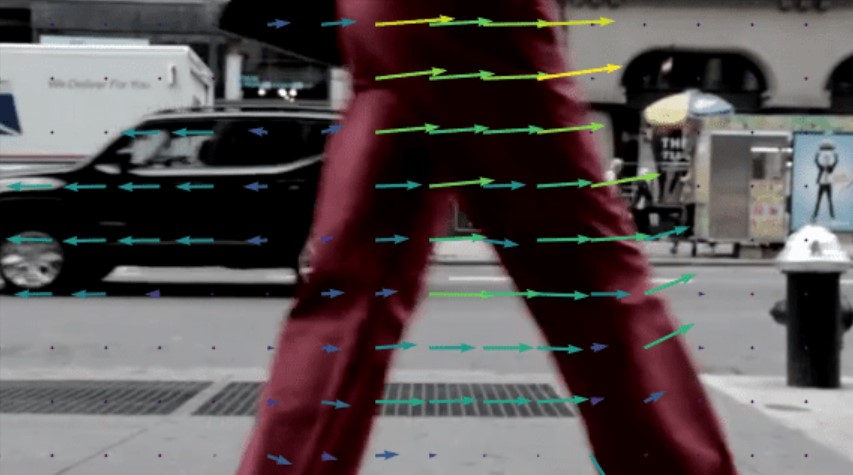

Optical flow is the task of estimating per-pixel motion between two consecutive frames of a video. In essence, it computes a shift vector for each pixel, describing how far the content at that pixel has been displaced between two neighboring frames. The goal is to estimate the displacement caused by an object's own motion or by camera movement.[1]
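One common way to formalize this, given here as general background rather than as the specific method used in the project, is the brightness-constancy assumption: a point keeps its intensity $I$ as it moves by $(\Delta x, \Delta y)$ over a time step $\Delta t$. A first-order Taylor expansion then gives a constraint on the per-pixel velocity $(u, v)$:

$$
I(x, y, t) = I(x + \Delta x,\, y + \Delta y,\, t + \Delta t)
\quad\Longrightarrow\quad
\frac{\partial I}{\partial x}\,u + \frac{\partial I}{\partial y}\,v + \frac{\partial I}{\partial t} \approx 0,
\qquad u = \frac{\Delta x}{\Delta t},\quad v = \frac{\Delta y}{\Delta t}
$$

This is a single equation in two unknowns per pixel, so practical algorithms add a further assumption, such as local smoothness of the flow, to recover a full motion field.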

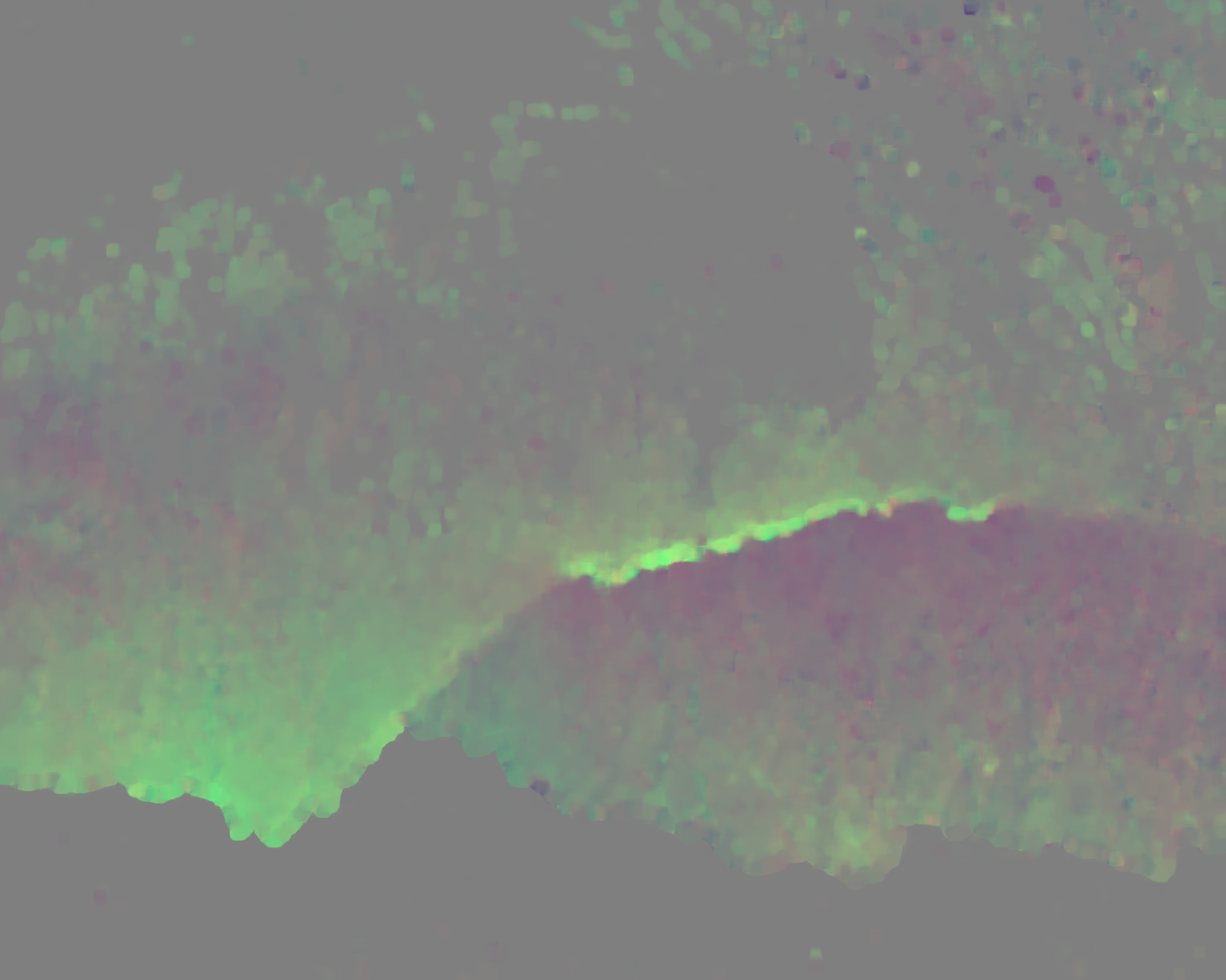

For each category of water movement, we curated one or two videos and analyzed them using Optical Flow in OpenCV (Python). This analysis encodes the magnitude and direction of movement between consecutive frames in the color of each pixel:

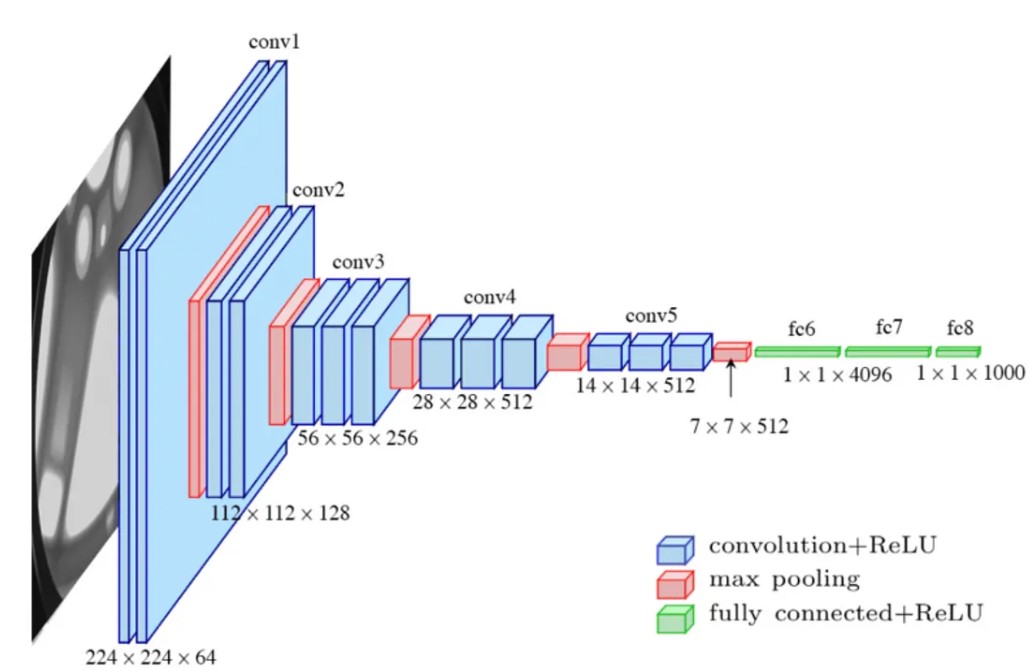
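The color encoding can be illustrated for a single flow vector. The sketch below assumes the convention used in many OpenCV examples, where hue represents direction and brightness represents magnitude; the write-up does not state the exact mapping, so the function name `flow_vector_to_rgb` and the `max_magnitude` parameter are hypothetical. In a full pipeline the flow vectors would come from a dense optical flow routine such as `cv2.calcOpticalFlowFarneback`, which is also an assumption here.

```python
import colorsys
import math


def flow_vector_to_rgb(dx, dy, max_magnitude=20.0):
    """Map one flow vector (dx, dy), in pixels per frame, to an RGB color.

    Assumed convention (not confirmed by the project write-up):
    hue encodes direction, brightness encodes speed.
    """
    magnitude = math.hypot(dx, dy)
    # Direction as an angle in [0, 2*pi), then normalized to a hue in [0, 1).
    angle = math.atan2(dy, dx) % (2 * math.pi)
    hue = angle / (2 * math.pi)
    # Speeds at or above max_magnitude saturate to full brightness.
    value = min(magnitude / max_magnitude, 1.0)
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, value)
    return (round(r * 255), round(g * 255), round(b * 255))


# A still pixel is black; fast motion to the right is bright red.
still = flow_vector_to_rgb(0, 0)
rightward = flow_vector_to_rgb(20, 0)
leftward = flow_vector_to_rgb(-20, 0)
```

Under this convention, opposite directions land on opposite sides of the color wheel, so a frame dominated by one hue indicates largely uniform motion.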

We trained a VGG model on the resulting color maps for 99 epochs, reaching an accuracy of 0.9753 and a loss of 0.0890 on the training set. On a test set of 356 images, the model classified every image correctly (100% accuracy).

3. Real-time Classifier

Using Optical Flow in OpenCV (Python), we converted each webcam frame into a color map, mirroring the approach taken during training, and passed it to the model for classification. For instance, a frame capturing a person with their arms open and waving could be classified as ocean waves, since it most closely resembles the images in that class.
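The structure of such a loop can be sketched without committing to a specific camera or model API. In the sketch below, `flow_to_color_map` and `predict_probabilities` are hypothetical callables standing in for the optical flow step and the trained VGG model, and the class order in `WATER_CLASSES` is an assumption; only the four category names come from the project.

```python
from typing import Any, Callable, Iterable, Iterator, Sequence

# Category names from the project; the index order is an assumption.
WATER_CLASSES = ("ocean_waves", "waterfall", "rainfall", "ripples")


def classify_stream(
    frames: Iterable[Any],
    flow_to_color_map: Callable[[Any, Any], Any],
    predict_probabilities: Callable[[Any], Sequence[float]],
) -> Iterator[str]:
    """Yield one water-motion label per pair of consecutive frames."""
    previous = None
    for frame in frames:
        if previous is not None:
            # Optical flow needs two frames, so the first frame yields nothing.
            color_map = flow_to_color_map(previous, frame)
            probabilities = predict_probabilities(color_map)
            best = max(range(len(probabilities)), key=probabilities.__getitem__)
            yield WATER_CLASSES[best]
        previous = frame


# Usage with stand-in components: "frames" are numbers, "flow" is their
# difference, and the fake model picks a class from that difference.
fake_frames = [0, 1, 5]
fake_flow = lambda a, b: b - a
fake_model = lambda d: [1.0, 0.0, 0.0, 0.0] if d < 2 else [0.0, 0.0, 0.0, 1.0]
labels = list(classify_stream(fake_frames, fake_flow, fake_model))
```

Writing the loop as a generator keeps the capture, flow, and model steps swappable, which makes it easy to test the control flow without a webcam.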

4. Animation

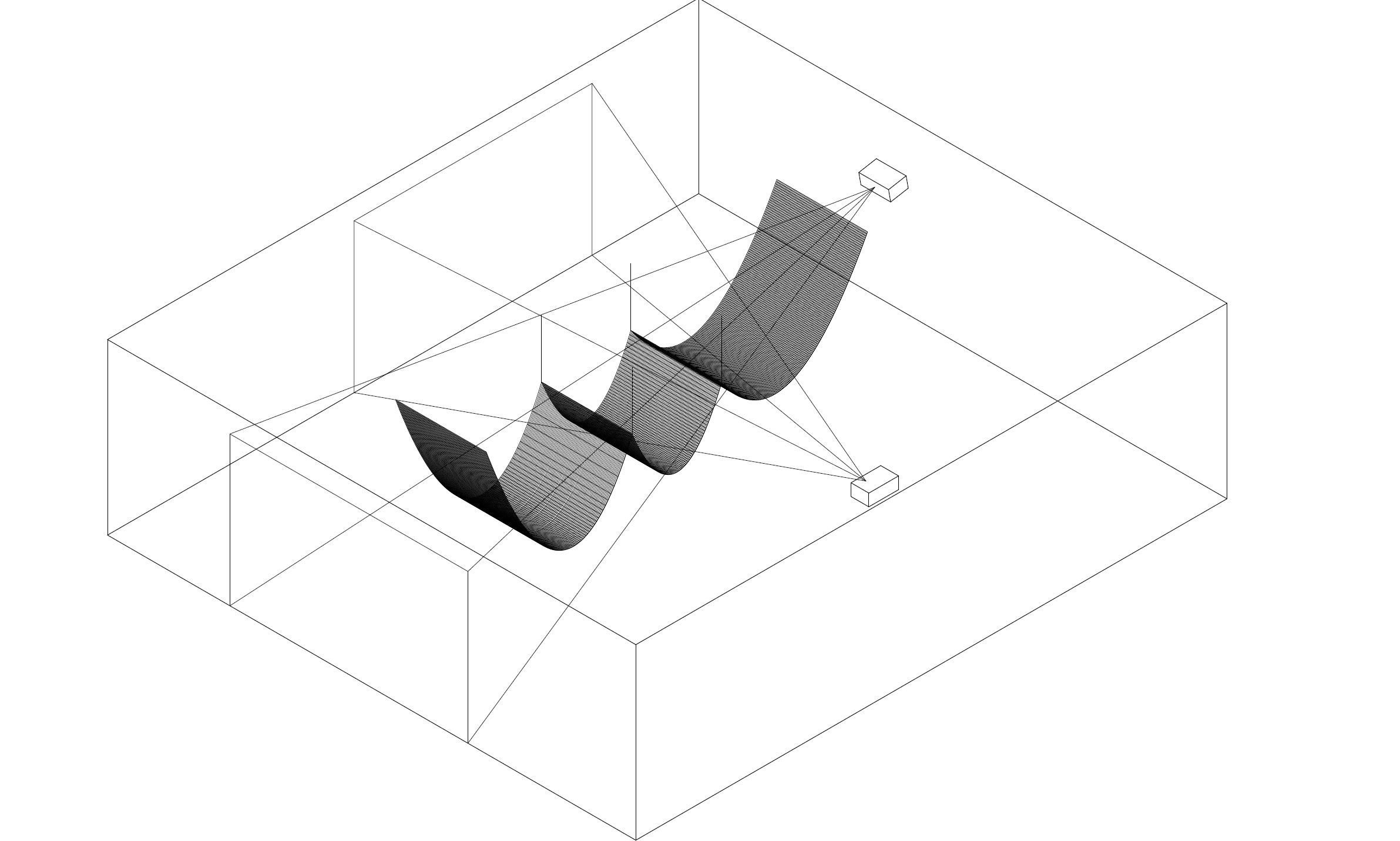

We aimed to generate artistic animations of water that correspond to the outcomes of the real-time classifier. For instance, if the movements of an audience member are identified as resembling an "ocean wave," we anticipate the animation will display patterns of ocean waves. If the movements shift and are recognized as a different type of water motion, we likewise expect the animation to transition smoothly to reflect this change.
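One simple way to obtain such a smooth transition is to crossfade between the outgoing and incoming animation values over a short window. The write-up does not describe how transitions were implemented, so the sketch below is an assumption throughout: the function names, the smoothstep easing, and the 1.5-second `duration` are all illustrative.

```python
def smoothstep(x):
    """Ease-in/ease-out curve on [0, 1]; inputs outside the range are clamped."""
    x = max(0.0, min(1.0, x))
    return x * x * (3 - 2 * x)


def crossfade(old_value, new_value, elapsed, duration=1.5):
    """Blend from old_value to new_value as elapsed goes from 0 to duration.

    old_value and new_value are the values (for example, a dot's vertical
    offset) produced by the outgoing and incoming water patterns.
    """
    weight = smoothstep(elapsed / duration)
    return (1 - weight) * old_value + weight * new_value


# Halfway through the transition the two patterns contribute equally.
start = crossfade(0.0, 10.0, elapsed=0.0)
middle = crossfade(0.0, 10.0, elapsed=0.75)
end = crossfade(0.0, 10.0, elapsed=1.5)
```

The easing curve starts and ends with zero slope, which avoids a visible jump at the moment the classifier output changes.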

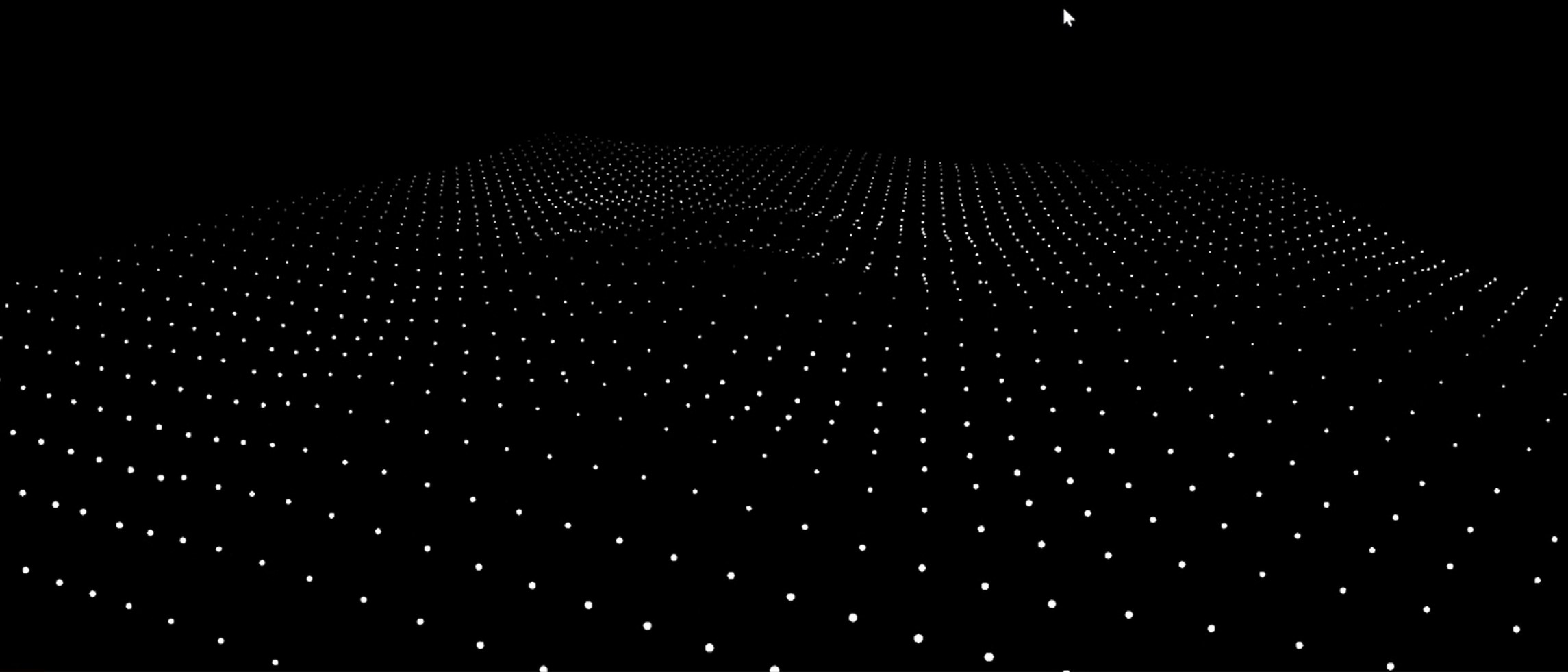

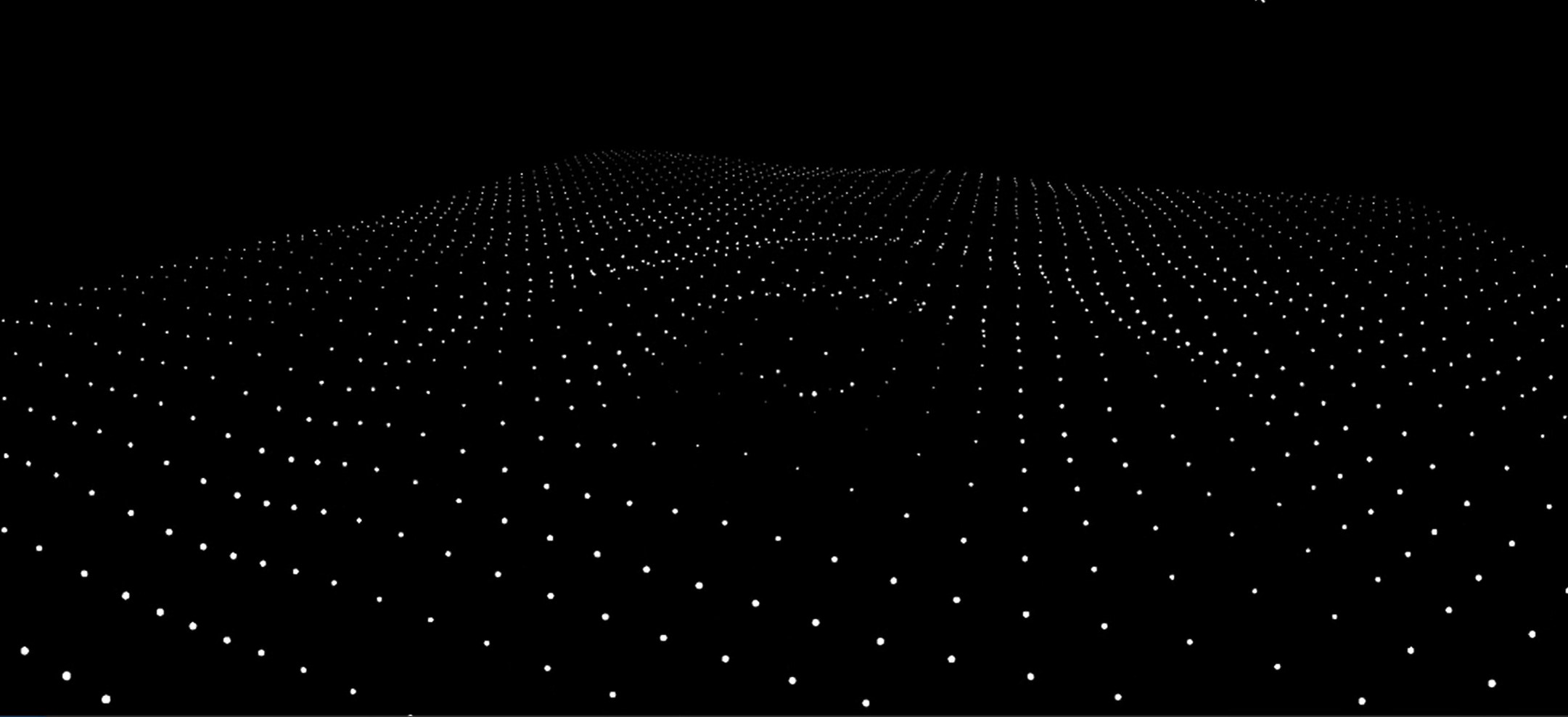

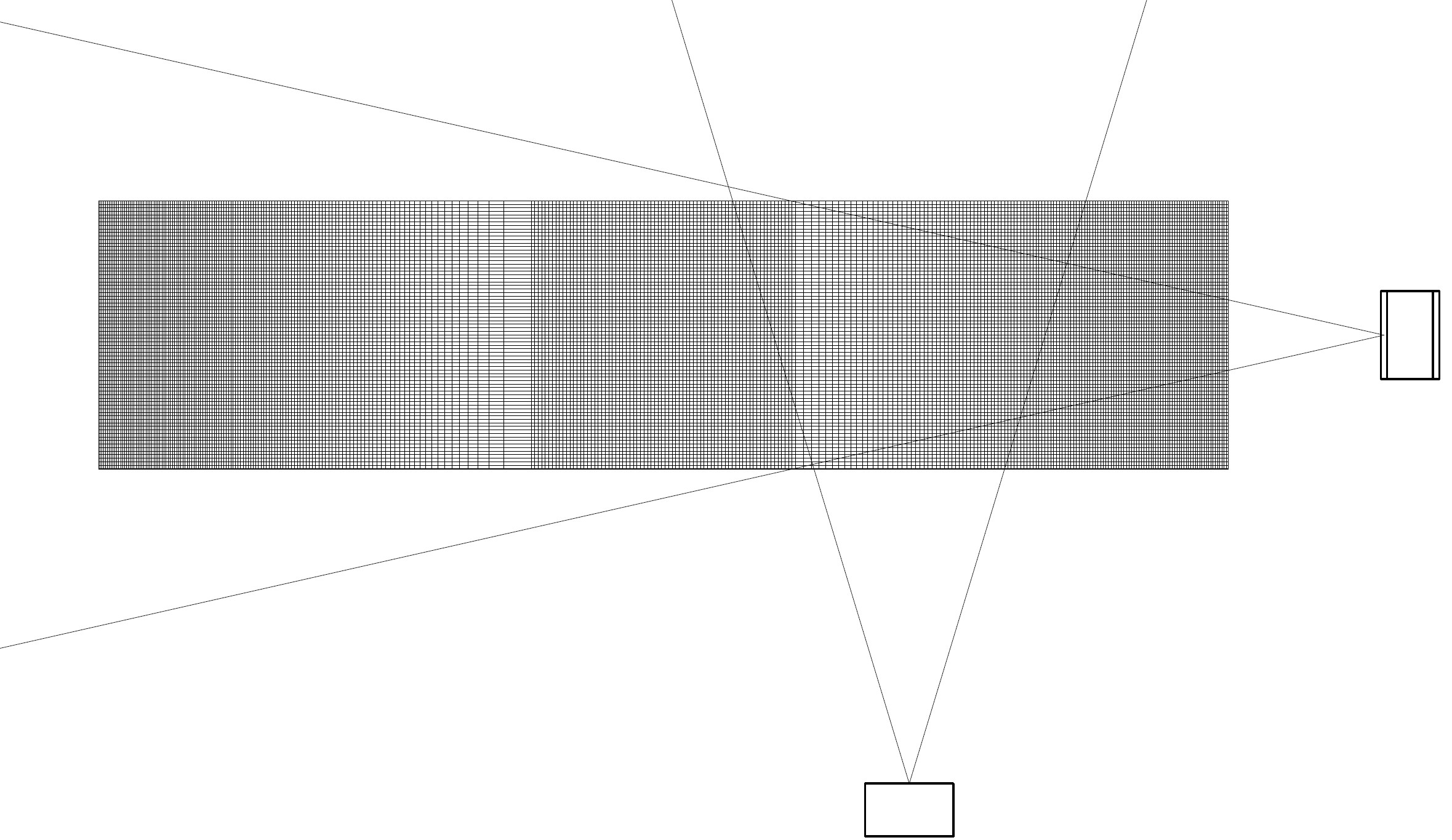

We designed a 50x50 grid, where each cell features a white dot. By parametrically adjusting the vertical movements of these dots, we created movement patterns that simulate various types of water motion.

The final challenge was integrating the animation on the front end with the classifier on the back end. Overcoming the inherent delays in data transmission was a complex task, and WebSocket allowed us to significantly reduce this lag. Establishing a bidirectional channel between the front end and the back end meant that each side could continuously listen for data from the other. This proved more efficient than traditional HTTP request-response approaches for real-time data exchange.
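The parametric dot grid can be sketched as a function that returns a vertical offset for every cell at a given time. The formulas below are illustrative assumptions rather than the project's actual parameters: a ripple is modeled as a sine wave spreading from the grid center, and an ocean wave as a sine wave travelling along one axis.

```python
import math

GRID_SIZE = 50  # the 50x50 grid described in the text


def ripple_offset(col, row, t, amplitude=1.0, wavelength=8.0, speed=2.0):
    """Vertical offset for a circular ripple spreading from the grid center."""
    center = (GRID_SIZE - 1) / 2
    radius = math.hypot(col - center, row - center)
    return amplitude * math.sin(2 * math.pi * (radius - speed * t) / wavelength)


def wave_offset(col, row, t, amplitude=1.0, wavelength=12.0, speed=3.0):
    """Vertical offset for a wave front travelling across the columns."""
    return amplitude * math.sin(2 * math.pi * (col - speed * t) / wavelength)


def grid_offsets(pattern, t):
    """Evaluate a pattern function on every dot; returns rows of offsets."""
    return [
        [pattern(col, row, t) for col in range(GRID_SIZE)]
        for row in range(GRID_SIZE)
    ]


# One animation frame of the ripple pattern at t = 0 seconds.
ripple_frame = grid_offsets(ripple_offset, 0.0)
```

Because each pattern is a plain function of position and time, switching water types only requires passing a different function to `grid_offsets`.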

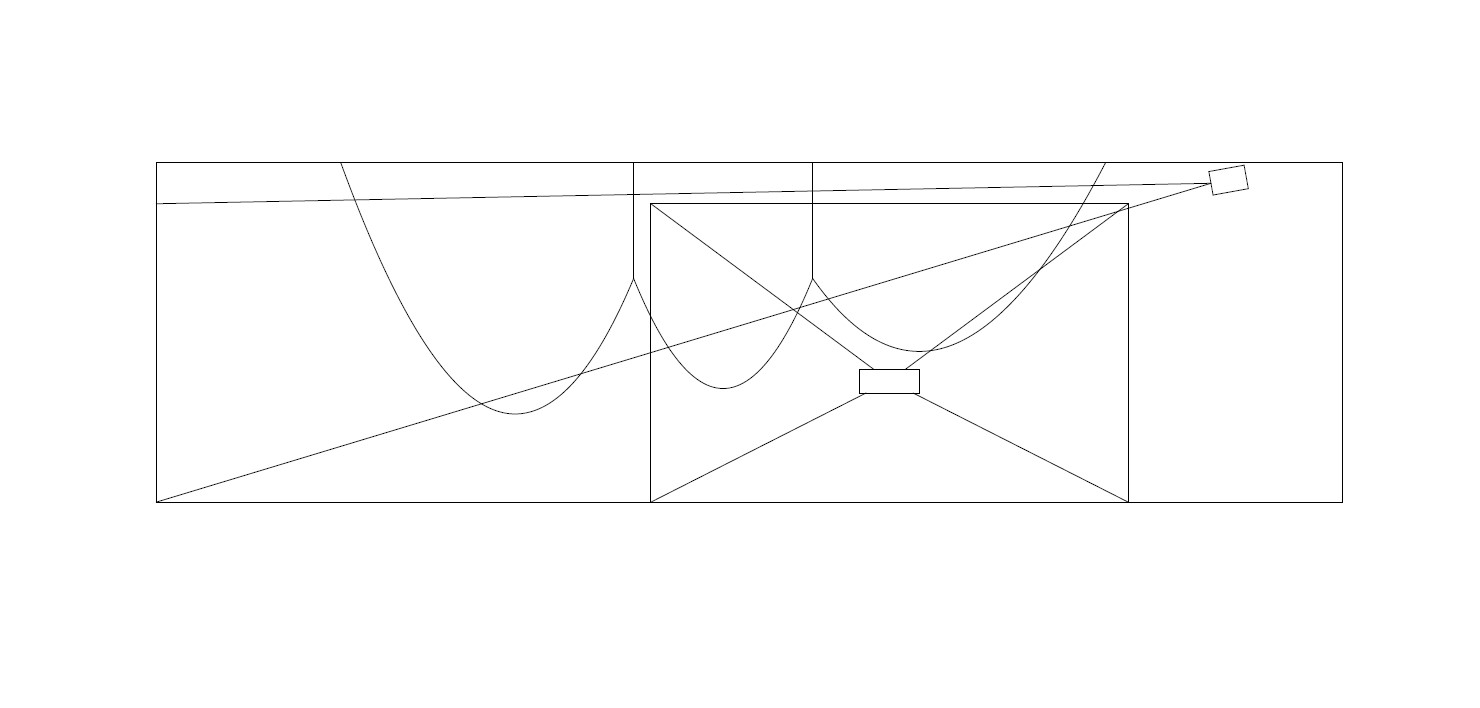
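The back-end side of the WebSocket link could look roughly like the sketch below, assuming a Python back end and the third-party `websockets` package; the write-up names neither. The message format, the `next_prediction` callable, the host, the port, and the send interval are all hypothetical.

```python
import asyncio
import json


def encode_prediction(label, confidence):
    """Serialize one classifier result as a JSON text message."""
    return json.dumps({"label": label, "confidence": confidence})


async def serve_predictions(next_prediction, host="localhost", port=8765,
                            interval=0.05):
    """Push the latest prediction to every connected front end.

    next_prediction is a hypothetical callable returning (label, confidence).
    """
    # Imported here so the helper above stays usable without the package.
    import websockets

    async def handler(websocket):
        try:
            while True:
                label, confidence = next_prediction()
                await websocket.send(encode_prediction(label, confidence))
                await asyncio.sleep(interval)
        except websockets.ConnectionClosed:
            pass  # the browser tab was closed or reloaded

    async with websockets.serve(handler, host, port):
        await asyncio.Future()  # keep serving until the task is cancelled


# Example payload as the front end would receive it.
message = encode_prediction("ripples", 0.5)
```

Running `asyncio.run(serve_predictions(...))` would start the server. Because the connection stays open, the front end receives each new label as soon as it is sent, without issuing a request per frame.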

5. Built Environment and Feedback Loop

The installation was set up in a dark space in the school building, with two intersecting projections and a piece of white tulle dominating the center of the space. One projector was suspended from the ceiling at the rear, while the other sat on a table to one side. The two beams played across the tulle, creating a shifting pattern of light that passed through the semi-transparent fabric and lit the opposite walls. The result was a highly immersive space that participants could engage with interactively.

In addition to human body movements, the webcam also captured the projected animation itself, which in turn influenced the subsequent animation. This enriched the animation and produced a cyclical interaction in which participants, often subconsciously, adapted their movements in response to what they saw. A feedback loop thus emerged between the human participants and the technological components of the installation.

A notable observation was how participants engaged with their shadows. Any movement close to the projector was greatly magnified, casting an enlarged silhouette on the walls. Once detected by the webcam, these silhouettes promptly influenced the subsequent animation. This feature was particularly well received: participants experimented with their body movements in front of the projector and watched the resulting effects on the animation.